Vision+

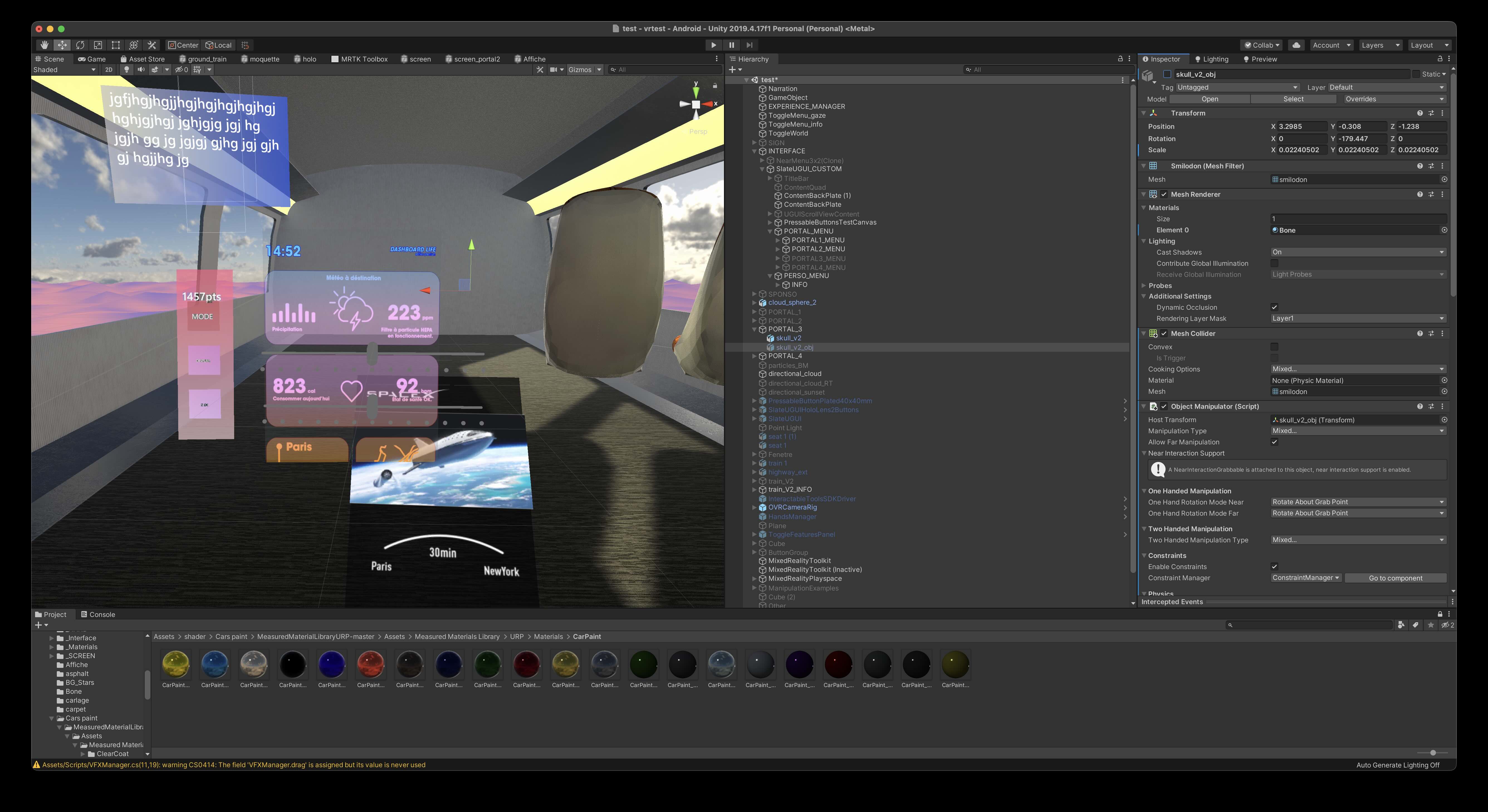

INTERACTIVE / Unity + MRTK + Meta Quest

Vision+ is a Unity/MRTK mixed-reality prototype exploring attention-based interaction and monetization patterns for near-future AR interfaces.

- Built the functional prototype entirely on my own, end to end (concept → implementation → iteration → product)

- Designed the credit-based incentive system (rules, thresholds, edge cases)

- Explored business-model use cases

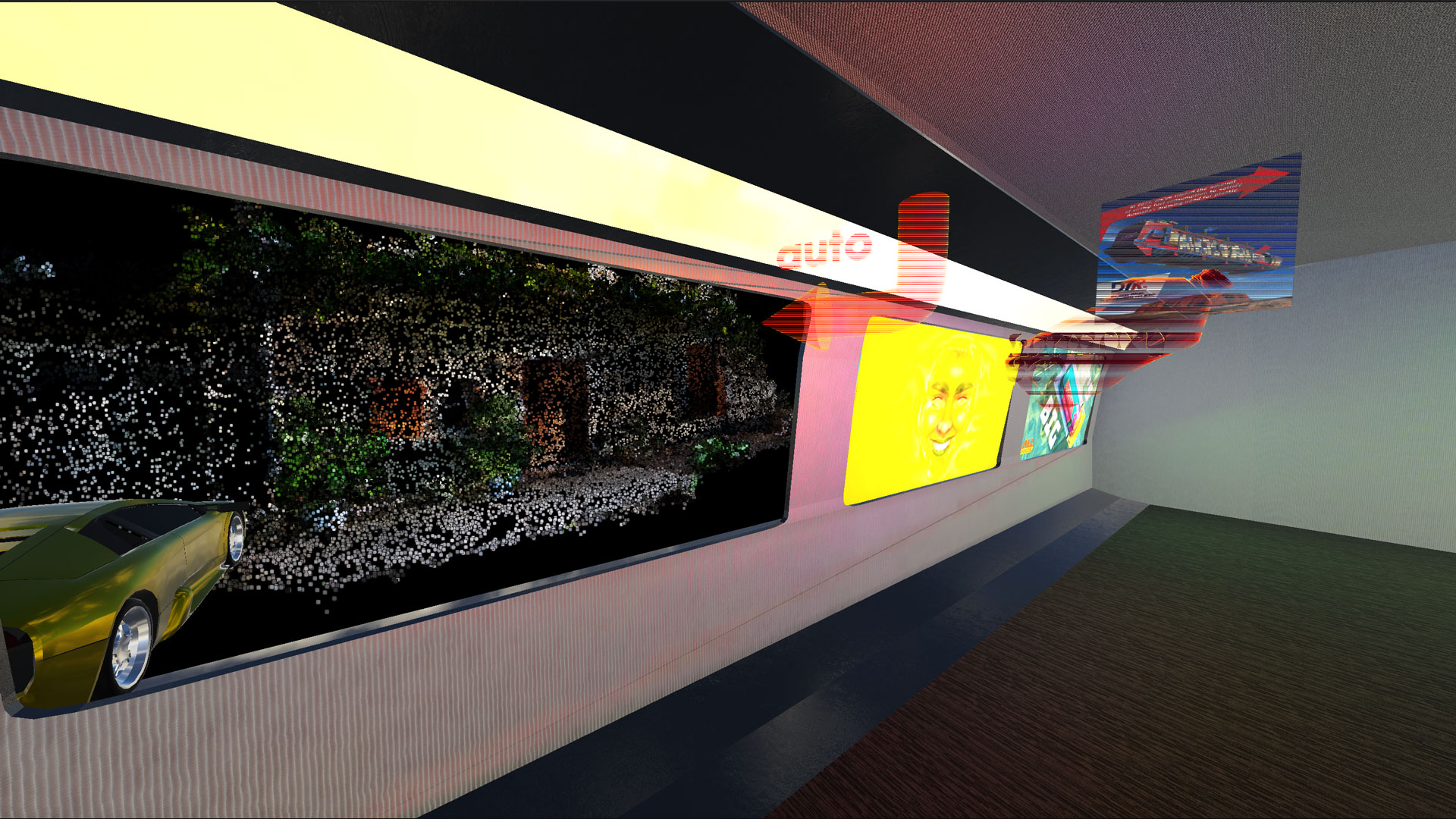

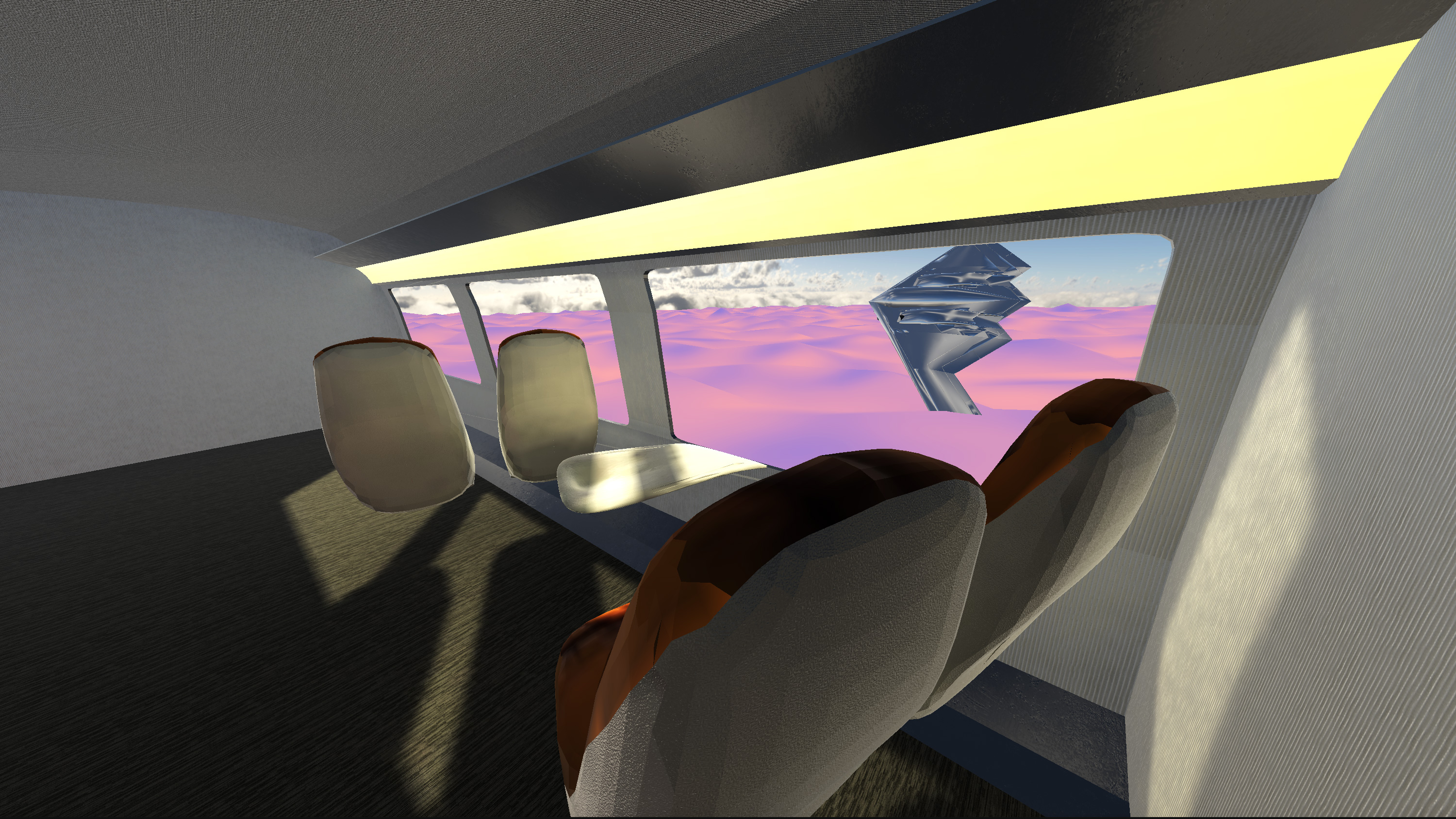

Vision+ is my end-of-year diploma project developed during my DSAA research year. It extends my work on interfaces—how screens mediate our perception of the world and shape the way we read and interact with our environment. Starting from early AR concepts like Google Glass, I explored a near-future scenario where persistent AR interfaces become normal: an always-on layer in the field of view, blending utility, personalization, and behavioral incentives. The project uses VR as a practical testbed to prototype what everyday AR interactions could feel like before the hardware is truly mainstream.

Hand tracking and virtual screen interactions

To earn points, users have to look at sponsored content and interact with it.

→ Tech stack:

Entirely developed in Unity/C# with Microsoft's Mixed Reality Toolkit (MRTK). Built for Meta Quest 2.

Complete MR interface integration.

Scoring based on user attention.

Optimized the scene to run in real time on the Meta Quest 2’s mobile SoC.

Earning points

→ Key interactions:

Full hand tracking

Interactive virtual overlay screens and buttons

Attention-credit loop: earn credits through meaningful interactions, then spend them to unlock ad filtering or alternative content

Simulated narrative AI contextual recommendations: the interface acts as a constant mediator between the environment and services

Points can be spent to access an ad-free environment and cultural information.
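The attention-credit loop can be illustrated as a small ledger. This is a minimal sketch in Python, not the project's actual Unity/C# code. The class name, thresholds, and costs are hypothetical placeholders. It assumes a "meaningful interaction" means a minimum gaze dwell time combined with an explicit interaction, that each sponsored item pays out only once, and that an unlock is refused when the balance is too low.

```python
from dataclasses import dataclass, field

# Hypothetical tuning values: the real Vision+ thresholds are not documented here.
MIN_DWELL_SECONDS = 2.0  # gaze time required before sponsored content counts as "seen"
CREDITS_PER_AD = 5       # reward for one look-and-interact event
AD_FILTER_COST = 20      # price of unlocking ad filtering / alternative content


@dataclass
class AttentionCreditLedger:
    """Hypothetical ledger: credits earned by attending to ads, spent on unlocks."""
    balance: int = 0
    rewarded_ads: set = field(default_factory=set)

    def record_attention(self, ad_id: str, dwell_seconds: float, interacted: bool) -> int:
        """Award credits only for a meaningful interaction (enough gaze time plus an
        explicit interaction), and at most once per ad so credits cannot be farmed.
        Returns the number of credits awarded."""
        if ad_id in self.rewarded_ads:
            return 0
        if dwell_seconds < MIN_DWELL_SECONDS or not interacted:
            return 0
        self.rewarded_ads.add(ad_id)
        self.balance += CREDITS_PER_AD
        return CREDITS_PER_AD

    def unlock_ad_filter(self) -> bool:
        """Spend credits to unlock ad filtering; refuse if the balance is too low."""
        if self.balance < AD_FILTER_COST:
            return False
        self.balance -= AD_FILTER_COST
        return True


if __name__ == "__main__":
    ledger = AttentionCreditLedger()
    for i in range(4):
        ledger.record_attention(f"ad-{i}", dwell_seconds=3.0, interacted=True)
    ledger.record_attention("ad-0", dwell_seconds=3.0, interacted=True)  # repeat, ignored
    print(ledger.balance)                             # 20
    print(ledger.unlock_ad_filter(), ledger.balance)  # True 0
```

Keeping earning and spending in one object makes the edge cases (repeat views, too-short glances, insufficient balance) explicit rules rather than scattered checks in the interaction code.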